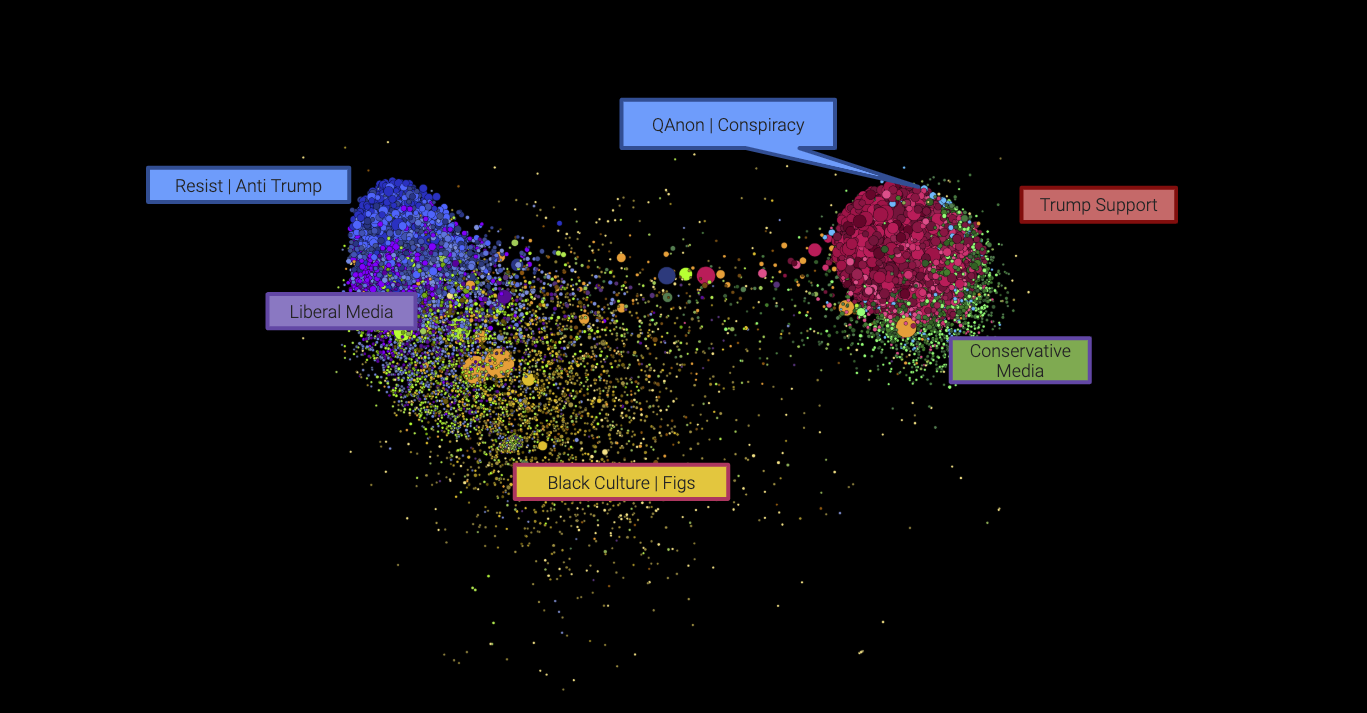

Graphika

The Institute for Strategic Dialogue, a London-based think-tank, is warning that ‘disinformation is becoming normalized’ in elections, adding that it was ‘taken aback’ by the level of deceit in the 2019 general election campaign.

“This election has been marinated in mendacity: big lies and small lies; quarter truths and pseudo-facts; distortion, dissembling and disinformation; and digital skulduggery on an industrial scale,” writes the Economist (HT:CFR).

The Russian Federation, as well as organizations, individuals and UK-based entities, attempted to influence the British election by spreading disinformation to alter voter perceptions of the candidates, the Soufan Center adds. The weaponization of ‘deep-fake’ technology in politics and elections in the UK is indicative that disinformation campaigns are becoming a mainstream tactic in the political party playbook, its Cipher Brief reports:

Graphika,* a firm that tracks online disinformation campaigns, noted that the Reddit campaign was orchestrated by the Russian Federation. … As these disinformation efforts become more pervasive and occur alongside state-driven disinformation campaigns, it may become increasingly difficult to assess the origins of election meddling. State actors, in fact, may now be in a position where they can decrease disinformation campaigns because their tactics have been co-opted by individuals and political parties. This concern is amplified when social media platforms like Facebook adopt policies that allow for false information in political ads.

“It’s the democratization of misinformation,” said Jacob Davey, a senior researcher at the Institute, a group that monitors global disinformation campaigns. “We’re seeing anyone and everyone picking up these tactics.”

Worldwide, billions of people are exposed to online disinformation, notes researcher Emily Cole, citing a new report which found that 70 states have engaged in coordinated disinformation campaigns on social media in 2019, most often in support of ruling politicians or political parties. They utilize disinformation in three distinct ways: “to suppress fundamental human rights, discredit political opponents, and drown out dissenting opinions,” recent research suggests.

Well before the term “hybrid war” was coined, the Kremlin pursued a strategy of subverting and seizing the territories of former satellites, CEPA’s Janusz Bugajski writes. Moscow’s approach to its neighbors is an international variant of the “salami tactics” applied by communist regimes to divide and eliminate domestic political opposition. Through a combination of targeted disinformation, diplomatic lawfare, and military action, Russian officials have been successful not only in carving up neighboring states but also in manipulating international players to legitimize their conquests.

The first rule of fighting disinformation? Think before you share. And, when in doubt, don’t share at all, USA TODAY’s Jessica Guynn reports.

Avoid the ‘tribal trap’

Whether you are worried about being hoodwinked by disinformation or not, here’s a good tip: Don’t forget your – or someone else’s – humanity, Guynn adds. Disinformation expert Ben Nimmo calls it the “tribal trap.” We dehumanize the person whose political views we reject. Challenge yourself to find one thing in common with the person who has posted something you disagree with, he advises.

“It’s the first step in preventing the cycle of anger that makes an easy target for disinformation,” he says. “Meeting people in real life is much more nuanced: You don’t just hear someone’s political views, you also hear their accent, and see their face and their body language; maybe they show what sports team they support, or where they went to school. Those are all things that make them more human.”

“People are much easier to manipulate when they’re angry or afraid. Con artists have known that for centuries,” adds Nimmo, director of investigations at Graphika, the social media analytics firm. “If you see a headline that makes you outraged or scared, ask yourself: Who’s trying to trick me?”

Politicians who parrot Russian propaganda fall into three categories, argues Daily Beast columnist Julia Davis:

- the Cynical (they know better but peddle the lies anyway)

- the Useful Idiots (easily manipulated)

- the Compromised (Russian assets who do Moscow’s bidding because they were recruited).

Researchers at Ohio State found that even when people are provided with accurate numerical information, they tend to misremember those numbers to match whatever beliefs they already hold, Nieman Lab’s Laura Hazard Owen writes: “For example, when people are shown that the number of Mexican immigrants in the United States declined recently — which is true but goes against many people’s beliefs — they tend to remember the opposite,” OSU’s Jeff Grabmeier reports in an article summarizing the research (the full paper is here).

Political disinformation campaigners range from high-end, million-dollar advertising companies, to midrange companies that hire university students on a casual basis, to young sole traders: entrepreneurs who understand the digital realm, strategically create disinformation content, and pay Facebook to boost it.

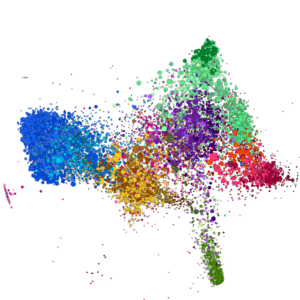

Graphika

“Strategic intent is not strategic impact”

Digital disinformation campaigns [addressed in a recent podcast from the National Endowment for Democracy’s (NED) International Forum] can be large and organized and still have very little impact on their targets, writes David Karpf, an associate professor of media and public affairs at George Washington University, in MediaWell, a unit of the Social Science Research Council.

The National Democratic Institute seeks a Director: Technology and Democracy (other vacancies here).

*Graphika leverages artificial intelligence to create detailed maps of social media landscapes, employing new analytical methods and tools to help partners navigate complex online networks.