The Fireworks of Pro-Kremlin Disinformation. EU vs. Disinfo

With platforms such as Facebook and Twitter, modern-day purveyors of disinformation need only a computer or smartphone and an internet connection to reach a potentially huge audience — openly, anonymously or disguised as someone or something else, such as a genuine grassroots movement, notes Bloomberg analyst Shelly Banjo.

In addition, armies of people, known as trolls, and so-called internet bots — software that performs automated tasks quickly — can be deployed to drive large-scale disinformation campaigns, she writes for The Post in a useful digest of the issues underlying the ‘Digital Disinformation Mess.’

The digital systems we use every day are dangerous to democracy, argues Ramesh Srinivasan, a professor at the University of California at Los Angeles, director of the UC Digital Cultures Lab and author of the forthcoming book “Beyond the Valley.” This is because the voices that create and monetize them are extremely limited — paving the way for a few tech billionaires to create the empires that monitor us daily, giving them the potential to manipulate our behavior.

Google, Facebook, Amazon and other companies can support democracy by entering into a new social contract, both to serve the public interest and to support our own sovereignty as users, he writes for The Post’s Monkey Cage.

Facebook’s plan to hire professional journalists instead of relying solely on algorithms to deliver news is a positive step but is unlikely to shake up an embattled media industry, analysts say.

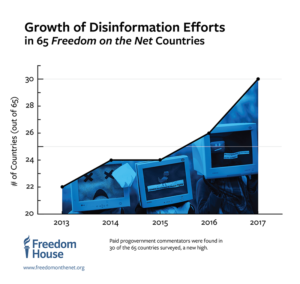

Freedom House

Social media and the internet have opened up new ways for hostile powers to directly abuse and influence individuals in democratic societies. False news, information operations, and online propaganda pose significant but distinct threats to the functioning of a democracy, adds Adrian Shahbaz, Freedom House Research Director for Technology and Democracy.

Recommended responses include:

- Remove content that is deliberately and unequivocally false under policies designed to combat spam or unauthorized use of the platform.

- Label or eliminate automated “bot” accounts. Recognizing that bots can be used for both helpful and harmful purposes, and acknowledging their role in spreading disinformation, companies should strive to provide clear labeling for suspected bot accounts. Those that remain harmful even if labeled should be eliminated from the platform.

- Do not remove state-owned media that function as propaganda outlets for hostile powers, unless they violate the platform’s terms of service through actions such as those cited above.

National Democratic Institute

Anti-

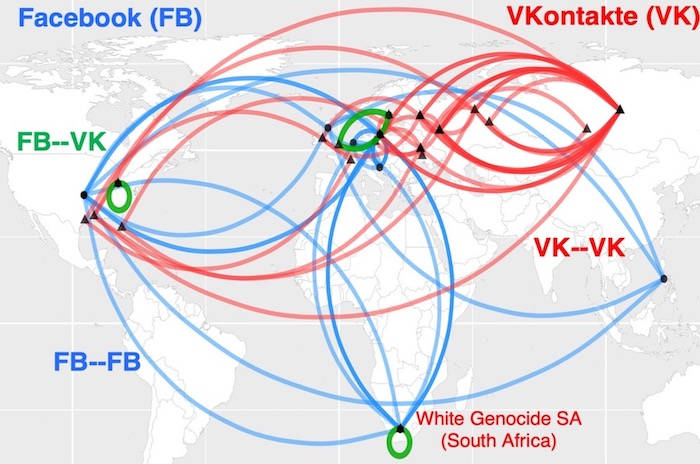

Policing on-line hate from within a single platform, such as Facebook, can make the spread of hate worse and will eventually generate global ‘dark pools’ in which hate will flourish, according to a recent scientific paper in Nature, notes analyst Steve Bush.

The paper was written after analysis using a “first of its kind” mapping technique (below), according to George Washington University, which worked with the University of Miami. “We set out to get to the bottom of on-line hate by looking at why it is so resilient and how it can be better tackled,” said project lead Neil Johnson.

In the wake of regular protests in Russia and the recent nuclear-powered missile accident, the Kremlin has been relying on concealment, another tactic in its disinformation toolkit, the European Values think-tank reports.

Disinformation Review.

The myth of Soviet occupation as liberation, perpetuated by the pro-Kremlin media, aims to erase from memory the mass repressions and suffering of millions, including Russians, at the hands of the Soviet regime, the EU East StratCom Task Force adds:

It enables pro-Kremlin disinformation to portray those who do not glorify the Soviet Union and refuse to see themselves as subjects of Moscow’s “sphere of influence” as Russophobes and fascists with a distorted historical memory. And when these narratives fall short, the pro-Kremlin media tries to paint an even gloomier picture of life outside Moscow’s reach: for example, by portraying the Baltic States as desolate lands about to be turned into military bases or shelter for African and Asian migrants. But what is the pro-Kremlin media to do when it cannot fall back on reliable disinformation narratives of fascism and Russophobia?

CSIS

China has taken a page from Russia’s playbook as Beijing systematically wages an online disinformation campaign aimed at inflating support for Hong Kong’s ruling authorities, The Cipher Brief adds:

- Beijing has deployed a relentless disinformation campaign on Twitter and Facebook powered by unknown numbers of bots, trolls, and so-called ‘sock puppets.’

- Twitter shut down nearly 1,000 accounts operated by the Chinese government that engaged in anti-protest propaganda, while Facebook removed numerous pages, groups, and accounts involved in ‘coordinated inauthentic behavior.’

- China’s behavior will likely grow more aggressive in both the physical and virtual realms, using on-the-ground actions to complement an intensifying cyber campaign characterized by disinformation, deflection, and obfuscation.

George Washington University