Hackers linked to Russia’s government tried to target the websites of two U.S. think-tanks, suggesting they were broadening their attacks in the build-up to November elections, according to Microsoft, Reuters reports.

In a report scheduled for release on Tuesday, Microsoft said that it identified and seized websites created by Kremlin-linked hackers. The sites were designed to give users the impression that they were clicking links managed by the Hudson Institute and the International Republican Institute, a core institute of the National Endowment for Democracy, but they were then covertly redirected to web pages created by the hackers to steal passwords and other credentials.

“It’s clear that democracies around the world are under attack,” Microsoft’s president, Brad Smith, stated.

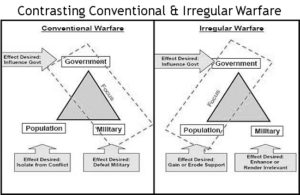

“These attacks are seeking to disrupt and divide,” he said. “There is an asymmetric risk for democratic societies. The kind of attacks we see from authoritarian regimes are seeking to fracture and splinter groups in our society.”

Repressive regimes are increasingly adept at using digital espionage to suppress dissent and stifle opposition at home and abroad, even in democracies, says analyst Adam Segal, highlighting a worrying trend featured in the NED’s Journal of Democracy.

Earlier this year, Columbia University’s School of International and Public Affairs held a workshop with Citizen Lab researchers to examine the digital threats facing civil society organizations, and the hurdles they encounter in mitigating them. Six challenges were identified, he writes for the Council on Foreign Relations:

- First, there is a lack of standardized data about state-sponsored cyber operations. Academics have spent time and energy on speculating about cyber conflict, but much less on collecting and analyzing empirical data. Meanwhile, independent research centers like the Citizen Lab and nonprofits like the Electronic Frontier Foundation, Human Rights Watch, and Access Now have created an invaluable body of reporting on targeted threats to CSOs. These organizations contribute high quality research but their output is limited given funding and manpower constraints. The private cybersecurity industry is much better funded and collects enormous data on targeted threats, but it is driven by business interests that do not typically prioritize civil society.

- Second, targeted digital threats to CSOs are not a local problem limited to authoritarian countries, but a global threat as these regimes increasingly target civil society across borders. The following chart shows data from an analysis of public threat reporting by cybersecurity firms and independent research centers (paper in collaboration with Ron Deibert forthcoming). A significant proportion of threat activity targets CSOs in liberal democracies—with the second-largest share of reported threats to CSOs located in the United States, surpassed only by China. Since civil society is vital to a functioning democracy, the trend of authoritarian regimes projecting power abroad through digital means is an assault on democracy itself.

- Third, technological development has created new opportunities for surveillance and espionage that evade existing regulatory and normative frameworks. Intelligence services and the private sector can exploit this grey area by supporting the development of new markets, such as the one for commercial spyware. While there is a legal case for lawful interception, accountability is challenging since these tools require secrecy to be effective. This puts civil society in peril, as illustrated by the controversies around spyware providers like FinFisher, Hacking Team and NSO Group selling capabilities on par with leading intelligence agencies to several authoritarian regimes.

- Fourth, there is a misplaced focus on developing technological solutions when the majority of compromises in fact rely on social engineering techniques. While initiatives like the Vulnerabilities Equities Process (VEP) in the US are a potentially promising way to regulate disclosure of technical vulnerabilities, they miss the growing use of social engineering techniques that exploit vulnerabilities in behavior, such as curiosity, the human propensity to trust and socialize, and lack of care in opening email attachments. Regulating technology alone thus misses the majority of threats. In most attacks on civil society, more effort is spent on social engineering to trick targets into opening a malicious attachment or link than on exploiting vulnerabilities in the technology itself, which is more expensive.

- Fifth, it is hard to quantify the effect of espionage on targeted CSOs, especially over the long term. The effects of attacks on corporations and government agencies can be quantitatively measured in terms of financial losses and the amount of sensitive data exfiltrated. Compromises affecting CSOs rarely result in financial damage, but rather in arrests and persecution of the targeted individuals and their family members, as well as longer-term effects that erode trust within a community as individuals’ accounts are compromised and used to spread malware over social networks.

- Sixth, there is no one-size-fits-all solution to mitigating the threat to CSOs. Each community is threatened and targeted differently. Tibetan diaspora organizations targeted by Chinese threat groups may face significantly different types of threats than Syrian opposition members targeted by both the regime and other non-state groups.

Other measures merit serious consideration, according to Edward Greenspon, president of the Public Policy Forum, and Taylor Owen, an assistant professor of digital media and global affairs at UBC, co-authors of the PPF report, Democracy Divided: Countering Disinformation and Hate in the Digital Public Sphere. Publishers and broadcasters should be legally obligated to inform their audiences about who purchases political ads in election campaigns, they write for The Globe and Mail:

- We need to do more to make sure that individuals exercise greater sovereignty over the data collected on them and then resold to advertisers or to the Cambridge Analyticas of the world. This means data profiles must be exportable by users, algorithms and AI must be explained, and consent must be freely, clearly and repeatedly given – not coerced through denial of services.

- Platforms such as YouTube, Facebook and Twitter need to be made liable to the same legal obligations as newspapers and broadcasters for defamation, hate and the like. Some people say this would amount to governments getting into the censorship business. That’s simply wrong; newspaper publishers and editors abide by these laws – or face the consequences – without consulting government minders. These digital platforms use algorithms to perform the same functions as editors: deciding which readers will see what content and with what prominence.

There are no easy answers to any of these challenges. Nevertheless, there are a few steps that grant-making organizations, policymakers, and tech companies could take to improve the cybersecurity of CSOs, Segal adds in Eliminating a Blind Spot: The Effect of Cyber Conflict on Civil Society:

- First, grant-making organizations could provide more funding to conduct additional research on the threat to CSOs.

- Second, governments could call out countries that conduct cyber operations against civil society groups, analogous to what the Five Eyes and others did in calling out Russia over the NotPetya ransomware incident. They should also regulate the commercial spyware industry to curb its use against human rights advocates.

- Third, increased accountability mechanisms could be established for the commercial spyware industry to identify and curb the use of these products against civil society.

- Finally, private companies should develop strategies that increase the adoption of security features (such as multi-factor authentication) to raise the cybersecurity standard for targeted and vulnerable CSOs.