Australian Strategic Policy Institute (ASPI)

Seeking to wrest control of the narrative, Beijing has stepped up its efforts to sow discord and disinformation about the months-long Hong Kong protests through some unusual methods on Twitter, Quartz reports:

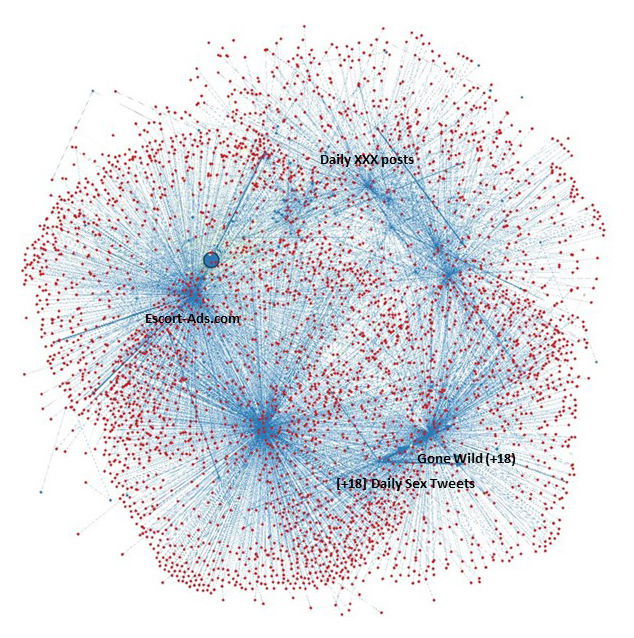

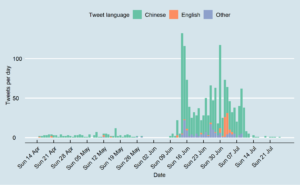

A report from Canberra-based think tank Australian Strategic Policy Institute (ASPI) published today (Sept. 3) said that some Twitter accounts at the center of Beijing’s recent online campaign had been tweeting a range of content including pornography, soccer, and K-pop prior to disseminating content about Hong Kong. At least two of the four accounts with the most retweets were related to pornography.

The report – Tweeting through the Great Firewall – cites Beijing propaganda outlets disseminating such tweets as: Freedom without a bottom line is by no means a blessing; democracy without the rule of law can only bring disaster and chaos. Although Hong Kong has a good financial background, it can’t afford to vacillate. It can’t take all of this internal friction and maliciously created agitation, which will only ruin Hong Kong’s future. The rule of law is the core value of Hong Kong.

Instagram will likely be the main social media platform used to disseminate disinformation during the 2020 election, while altered “deepfake” videos of candidates will pose a threat as well, according to a report out on Wednesday, The Hill reports.

The report on disinformation tactics during the 2020 election, put together by New York University’s (NYU) Stern Center for Business and Human Rights, pinpoints China, Russia, and Iran as countries likely to launch such attacks against the U.S. in the lead up to the elections.

Tech firms’ policies need to change to prepare for escalating disinformation threats ahead of the next election, said Paul M. Barrett, the NYU professor who wrote the report, Disinformation and the 2020 Election: How the Social Media Industry Should Prepare. Social media firms have to do everything they’ve been doing and more to prepare for the ever-changing threat, he told The Post’s Cat Zakrzewski, proposing nine recommendations for how companies should get ready for disinformation in 2020:

1. Invest in detecting and removing deepfake videos — before regulators step in and require companies to do so. …Earlier this year, Google announced a project to invest in research on fake audio detection, and Barrett says other companies should follow.

2. Take down content that can “definitively be shown to be untrue.” In his boldest proposal, Barrett argues companies are increasingly taking down other types of problematic content, like hate speech and posts deemed to be aimed at voter suppression. …

3. Hire a “content overseer” reporting directly to the chief executive and chief operating officer. Barrett says right now, responsibility for content decisions tends to be “scattered” across many different teams at the tech companies. …

4. Increase defenses against misinformation at Instagram…. Barrett says it’s time for Instagram and its parent company Facebook to come up with a clearer strategy for approaching disinformation, especially as he predicts phony memes could be a key tool bad actors deploy in 2020.

5. Restrict message forwarding even more at WhatsApp. Earlier this year, Facebook instituted a five-time limit on message forwarding to WhatsApp groups to ensure rumors and fake news don’t fly rampant. Barrett thinks this is a good first step, but he recommends the company go farther …

6. Pay more attention to the growing threat of for-profit disinformation campaigns. For-profit disinformation services have gone global and are becoming more sophisticated, Barrett warns….

7. Support legislation to increase political ad transparency on social media. Barrett thinks it’s time for the tech industry to throw its “considerable lobbying clout” behind bills like the Honest Ads Act, which would require tech companies to be more transparent about who is paying for political ads on their platforms. …

8. Collaborate more. The companies should create a permanent intercompany task force focused on disinformation…

9. Make social media literacy a prominent and permanent feature on their websites. …[S]ocial media companies have made positive strides in supporting digital literacy programs that educate the public about disinformation, like supporting classes at school for children and teens. But he says the companies could post a reminder to their users about the threat of disinformation every time they log in.

“The more often users are reminded of this fact — and are taught how to distinguish real from fake — the less influence false content will wield,” Barrett writes in the report. “Concise, incisive instruction, possibly presented in FAQs format, should be just one click away for all users of all of the platforms, all of the time.”

Michael Posner, the director of NYU’s Stern Center, said that “taking steps to combat disinformation isn’t just the right thing to do, it’s in the social media companies’ best interests as well. … Fighting disinformation ultimately can help restore their damaged brand reputations and slows demands for governmental content regulation, which creates problems relating to free speech,” he added. RTWT

The US government believes that fake news and social media posts are becoming such a threat to the country’s security that its military is building its own software to repel “large scale, automated disinformation attacks,” The [London] Times adds:

Darpa, the defence department’s research agency, is developing a programme that can detect fake news stories buried among more than 500,000 reports, videos, photos and audio recordings. It will go through a four-year training phase, according to Bloomberg News, where it will be fed hundreds of thousands of news articles and social media posts, some of which are fake, to build algorithms that can detect what is genuine.

Concern is growing that fake news is polarising society and jeopardising democracy, with the ability to hinder elections, by rapidly spreading misinformation. The software is designed to spot subtle details in a fake news story or video. … The move comes as a “deepfake” Chinese video app has shot to the top of the download chart in China, prompting concerns about how good technology is becoming at producing highly realistic fake videos.

Source: StopFake

Long before its interference in the US election, “Russia had honed its tactics in Estonia, followed soon after by attempts, with varying degrees of success, to disrupt the domestic politics of Georgia, Kyrgyzstan, Kazakhstan, Finland, Bosnia and Macedonia,” analyst Joshua Holland writes:

While those efforts were on behalf of politicians and parties that were seen as advancing the Kremlin’s interests, and weren’t strictly ideological, in most cases they resulted in support for far-right ethno-nationalists. …More recently, Russia appears to have assisted the Yes campaign leading up to the U.K.’s Brexit referendum and is alleged to have offered financial and other assistance to a whole string of far-right, anti-immigrant parties across Western Europe.

EU VS DISINFO

Of the Kremlin’s favoured disinformation narratives, perhaps the most enduring is whataboutism about the supposedly persistent evils of Western imperialism and meddling around the world, EU vs Disinfo reports:

As we have explained previously, a core component of the Kremlin’s global disinformation strategy is to deflect attention from its own crimes and authoritarian behaviour by pointing fingers at others and accusing them of the same transgressions – and typically resorting to gross misrepresentations or outright lies to make the point. In many cases, this tactic is also accompanied by efforts to paint Russia as a victim, unfairly targeted by Russophobia and attempts to undermine its sovereignty and/or international standing.

In this way, the Kremlin kills two birds with one stone: it conjures the image of a belligerent and imperialist West, thus manufacturing a fictional adversary that provides convenient cover for Moscow to justify its illicit activities on grounds of “defence” and self-preservation, StopFake adds. The goal, as ever, is to relativize truth and undermine liberal-democratic claims to moral superiority by suggesting that the West is equally bad, if not worse, than Russia. This week’s cases exemplify the impressive variety of ways that the pro-Kremlin disinformation machine adapts this narrative frame to different situations and contexts.

The rapid proliferation of censorship and surveillance technology around the world is threatening human rights, the NED’s Center for International Media Assistance adds. Authored by Open Technology Fund Information Controls Fellow Valentin Weber, a new research report to be released at the following event tracks the export of Chinese and Russian censorship and surveillance technology around the world, providing fresh insight into the importance of telecommunications infrastructure to modern authoritarianism.

Welcome and Opening Remarks:

Daniel O’Maley, Deputy Editor and Digital Policy Specialist, Center for International Media Assistance

Speakers:

Natalia Arno, President, Free Russia Foundation

Ron Deibert, Director, The Citizen Lab

David Kaye, U.N. Special Rapporteur for Freedom of Expression

Valentin Weber, University of Oxford and OTF Information Controls Fellow

Moderator: Laura Cunningham, Principal Director, Open Technology Fund

Tuesday, September 17, 10:00-11:30am EDT

Light refreshments served at 10:00am

National Endowment for Democracy, 1025 F Street NW, Suite 800, Washington, DC 20004. RSVP

Stern Center for Business and Human Rights