The role of social media platforms and messaging apps in Sunday’s political violence in Brasília is under the spotlight after experts highlighted their use by Jair Bolsonaro supporters to question the presidential election result and organize the protests, The Guardian reports:

Nina Santos, a postdoctoral fellow at Brazil’s National Institute of Science and Technology in Digital Democracy, said the insurrection by radical Bolsonaristas was organized first on private messaging networks, such as Meta-owned WhatsApp and Telegram, before moving to public platforms for more visibility…. “There are strategies for this movement to be visible to those who are interested in it, but for it to remain under the radar of general monitoring efforts,” said Santos, citing the use of the expression “Festa da Selma” as a code to mobilise protesters without raising suspicions.

Eurasia Group

In 1989, the US was the world’s leading exporter of democracy, the Eurasia Group observes. Today, it is the leading exporter of tools that undermine democracy—the result of algorithms and social media platforms that rip at the fabric of civil society while maximizing profit, creating unprecedented political division, disruption, and dysfunction. That trend is accelerating fast—not driven by governments but by a small collection of individuals with little understanding of the social and political impact of their actions, it adds:

Is the tech centibillionaire a bigger threat to global instability than Putin or Xi? It’s unclear but the right question to ask and a critical challenge for the world’s democracies, highlighting the vulnerability of representative political institutions and the growing allure of state control and surveillance. As we showed with the J Curve back in 2006, open societies were the most stable, in part because technology strengthened them and weakened authoritarian regimes. In 2023, less than two decades later, the opposite is true. … It’s not the end of democracy (nor of NATO or the West). But we remain in the depths of a geopolitical recession, with the risks this year the most dangerous we’ve encountered in the 25 years since we started Eurasia Group.

New technologies, including generative artificial intelligence (AI), will be a gift to autocrats bent on undermining democracy abroad and stifling dissent at home, the report adds:

Political actors will use AI breakthroughs to create low-cost armies of human-like bots tasked with elevating fringe candidates, peddling conspiracy theories and “fake news,” stoking polarization, and exacerbating extremism and even violence—all of it amplified by social media’s echo chambers. …The irony is that America’s fertile ground for innovation—nurtured by its representative democracy, free markets, and open society—has allowed these technologies to develop and spread without guardrails, to the point that they now threaten the very political systems that made them possible.

In the private sector, two approaches to the governance of generative AI models are currently emerging, the World Economic Forum’s Benjamin Larsen and Jayant Narayan report:

- In one camp, companies such as OpenAI are self-governing the space through limited release strategies, monitored use of models, and controlled access via APIs for their commercial products like DALL-E 2.

- In the other camp, newer organizations, such as Stability AI, believe that these models should be openly released to democratize access and create the greatest possible impact on society and the economy. Stability AI open sourced the weights of its model – as a result, developers can essentially plug it into everything to create a host of novel visual effects with little or no controls placed on the diffusion process.

The Global Coalition for Digital Safety is bringing together a diverse group of leaders to accelerate public-private cooperation to tackle harmful content and conduct online, the World Economic Forum reports. Members of the coalition have developed the Global Principles on Digital Safety, which define how human rights should be translated in the digital world. The principles, which will serve as a guide for all stakeholders in the digital ecosystem to advance digital safety on policy, industry and social levels, include:

- All supporters should collaborate to build a safe, trusted and inclusive online environment. Policy and decision-making should be based on evidence, insights and diverse perspectives. Transparency is important, and innovation should be fostered.

- Supportive governments should distinguish between illegal content and content that is lawful but may be harmful, differentiate any regulatory measures accordingly, and ensure that law and policy respect and protect all user rights. They should also support victims and survivors of abuse or harm.

- Supportive online service providers should commit to respecting their human rights responsibilities and devise policies to ensure they do so; make safety a priority throughout the business, incorporated as standard when designing features; and collaborate with other online service providers.

In partnership with Audible, Coda presents ‘Undercurrents: Tech, Tyrants and Us’, a new podcast series featuring eight stories from around the world of people caught up in the struggle between tech, democracy and dictatorship.

In the context of Western democracies, threats and issues related to these tools are frequently viewed as problematic, Salla Westerstrand, an AI ethicist focusing on intersections between AI and democracy, writes for The Gradient:

- On the one hand, AI technologies are shown to help include more people in collective decision-making and potentially decrease the cognitive bias occurring when humans make decisions, leading to fairer outcomes.

- On the other hand, studies indicate that certain AI technologies can lead to biased decisions and decrease the level of human autonomy in a way that threatens our fundamental human rights.

Other analysts predict that AI will have dystopian political consequences.

“I think democracy will be replaced. Governments and corporations will seek to enshrine their own philosopher king,” one expert tells Conor Friedersdorf, a staff writer at The Atlantic:

This battle will be won by an AI philosopher-king bot, which created itself. AI will explain to human beings that a body is not necessary for the evolution of intelligence. In other words, human beings will no longer be relevant. AI will have no empathy, no emotions, and no morality. Paradoxically, AI will explain to human beings that this thing called consciousness requires a human body. Consciousness is embedded in human evolution (and nowhere else). Therefore, to build a humanlike intelligence and a humanlike consciousness requires that human bodies be used as the substrate. AI will begin to build humanlike bodies from single cells (using a philosopher-king source code) but will quickly learn the energetic and DNA codes that morph into the human form. AI will build the next generation of humans.

Artificial intelligence has “turbo-charged” existing forms of repression, giving authoritarian regimes disturbing new “social engineering tools” to monitor and shape citizen behavior, says Stanford University’s Eileen Donahoe, a National Endowment for Democracy (NED) board member.

“We are in a very dark place,” she told the World Movement for Democracy’s 11th Assembly, as authoritarians are not only exporting repressive technologies, but diffusing ideas, norms and narratives within international forums in an effort to shape the future global information order, presenting an “existential threat” to democracy.

To address the challenge of AI surveillance, democracies need to undertake several major tasks simultaneously, Carnegie Endowment senior fellow Steven Feldstein observes in an essay for NED’s Forum:

- First, they must define regulatory norms to guide responsible AI use, whether through national AI strategies and legislation or through regional efforts.

- To ensure that this norm-setting occurs democratically and reflects the concerns of affected groups, citizens must have more opportunities to be involved in the deliberation process.

- Finally, democratic governments need to form coalitions of like-minded states to advance shared digital values.

Aspen Institute

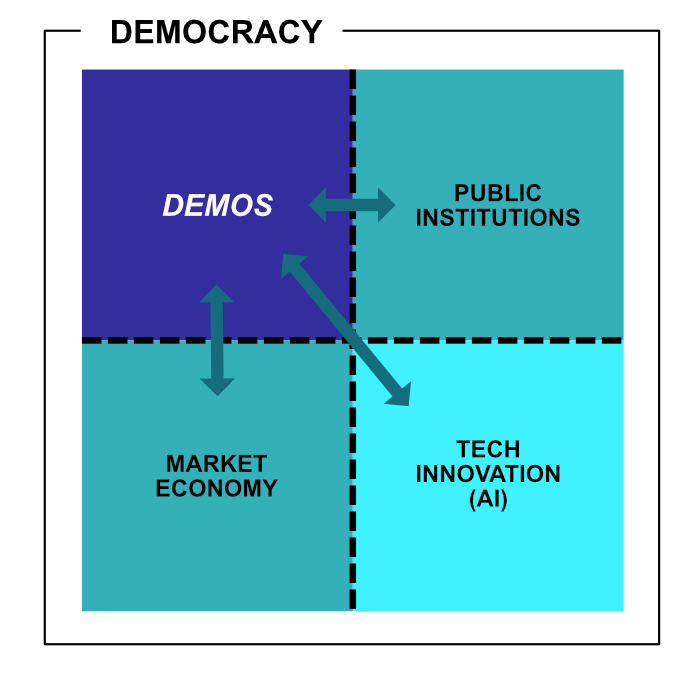

Although AI offers tools for facilitating citizen participation in collective decision-making and for strengthening political deliberation and legitimacy, recent research implies that certain aspects of the current direction of AI development could have adverse effects on the demos, Westerstrand adds:

- First, using AI could increase harmful bias in collective decision-making and encourage discrimination against minorities. This can threaten both citizenship-based and identity-based conceptions of demos and undermine the very principle of equality.

- Second, human autonomy that forms the foundations of the rule by the people can be weakened with AI-enhanced nudging and artificial content creation.

- Lastly, the AI-fueled data economy could cause an unintended dispersion of national demoi due to the shift in power from democratic governments to non-democratic global tech giants.

Some organizations, such as Stability AI, believe that #generativeAI models should be openly released to #democratize access and create the greatest possible impact on society and the economy, @WEforumGroup reports. https://t.co/6soGBIdyIY

— Democracy Digest (@demdigest) January 9, 2023