The post-truth world of alternative facts, deepfakes and other digitally disseminated disinformation is the territory explored by Samuel Woolley, an assistant professor in the school of journalism at the University of Texas, in The Reality Game. Woolley uses the term ‘computational propaganda’ for his research field, and argues that “The next wave of technology will enable more potent ways of attacking reality than ever”, analyst Charles McLellan writes for ZDNet:

Woolley takes a tour of the past, present and future of digital truth-breaking. He traces its roots from a 2010 Massachusetts Senate special election, through anti-democratic Twitter botnets during the 2010-11 Arab Spring, misinformation campaigns in Ukraine during the 2014 Euromaidan revolution, the Syrian Electronic Army, Russian interference in the 2016 US Presidential election and the 2016 Brexit campaign, to the upcoming 2020 US Presidential election. He also notes examples in which online activity, such as rumours about Myanmar’s Muslim Rohingya community spread on Facebook and WhatsApp disinformation campaigns in India, has led directly to offline violence.

By writing The Reality Game, Woolley wants to empower people: “The more we learn about computational propaganda and its elements, from false news to political trolling, the more we can do to stop it taking hold,” he says. Shining a light on today’s “propagandists, criminals and con artists” can undermine their capacity to deceive. RTWT

On Tuesday, Jigsaw, a company that develops cutting-edge tech and is owned by Google’s parent company, unveiled a free tool that researchers said could help journalists spot doctored photographs, even ones created with the help of artificial intelligence, The New York Times reports:

Jigsaw, known as Google Ideas when it was founded, said it was testing the tool, called Assembler, with more than a dozen news and fact-checking organizations around the world. They include Animal Politico in Mexico, Rappler in the Philippines and Agence France-Presse.

Assembler brings together multiple image manipulation detectors developed by various academics into one tool, each designed to spot a specific type of image manipulation, adds Jared Cohen, Jigsaw’s CEO and founder. Individually, these detectors can identify very specific types of manipulation, such as copy-paste edits or changes to image brightness. Assembled together, they begin to create a comprehensive assessment of whether an image has been manipulated in any way, he writes for Medium.

Originally established in 2010 as Google Ideas, a “think/do tank” to research issues at the intersection of technology and geopolitics, Jigsaw is also announcing a “research publication” called The Current, where people can access information about disinformation and about the efforts of Jigsaw and its partners to fight it, FastCompany adds.

“We want to be working with the people who are on the front lines of countering disinformation, and we’re really trying to look around the next corner so that we can build and ship tools that can address future manifestations of the problem,” says Jigsaw’s Cohen. “It’s a prodigious problem.”

One feature of the inaugural issue is the Disinformation Data Visualizer, Cohen adds. Built by Jigsaw from the Atlantic Council’s DFRLab research on coordinated disinformation campaigns around the world, it shows the specific tactics used and the countries affected.

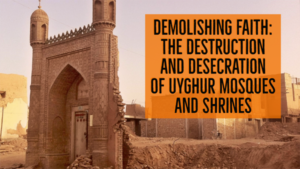

The New York Times’ report on 403 pages of leaked internal documents detailing China’s pressure campaign on Muslim ethnic minorities in the province of Xinjiang shows that concrete evidence is leaking out despite Beijing’s attempts at disinformation, notes Hyunmu Lee. The United States should not only refute China’s denials of re-education camps for alleged “terrorist” Uighurs but also issue an official statement about the Chinese Communist Party’s (CCP) disinformation campaign, Lee writes for The National Interest.

Russia used the capacity of the internet and social media to amplify and exacerbate existing discontent and to magnify its traditional disinformation efforts, adds James Andrew Lewis, director of the Technology Policy Program at the Center for Strategic and International Studies. Research on the political effect of the internet suggests that fake news and other social media campaigns do not change people’s minds. Instead, they confirm existing beliefs and exacerbate existing fears and conflicts.

But Russian experts are convinced that Moscow is actually losing the current information war, notes analyst Paul Goble.

In a recent article for Svobodnaya Pressa bluntly entitled “The Information War: Why We Are Losing to the West,” Yury Piskulov says that whatever successes Moscow has had in its information competition with the West, it has suffered far greater losses. He argues that Moscow must address its shortcomings because “the fourth estate” is an ever more important player in international competition, Goble writes for the Jamestown Foundation.

@WarOnTheRocks features a helpful analysis of Influence Operations and Active Measures: The History of Soviet and Russian Political Warfare in the West.

Perhaps no other domain is as pivotal as that of technology when it comes to authoritarian sharp power’s impact on democracy, according to the National Endowment for Democracy’s Christopher Walker, Shanthi Kalathil and Jessica Ludwig. Technological interdependence has enabled modernizing authoritarians to reach across borders to censor and manipulate public discourse, sharpen polarization, and undermine democracy, they write for The Journal of Democracy.

The International Foundation for Electoral Systems (IFES), based in Arlington, Virginia, seeks a Senior Global Advisor on Cybersecurity, Policy and Democracy (HT: Global Jobs)